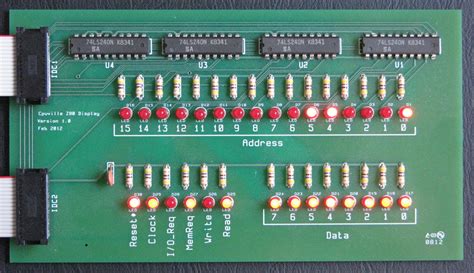

How a Data Bus Works

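A data bus is a set of electrical conductors, traditionally laid out in parallel, that connects the internal components of a computer, such as the CPU, memory, and peripherals. Strictly speaking, the data bus is the group of lines that carries the data itself. Together with the address lines, the control lines, and (in some bus standards) power lines, it makes up the complete bus that links these components.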

The data bus works by transmitting data as electrical signals. In a simple handshake protocol, the sending device places the data on the bus and asserts a control signal indicating that the data is valid. The receiving device then reads the data from the bus and asserts an acknowledgement signal. Many buses are instead synchronous: they time each transfer to a shared clock rather than waiting for an acknowledgement.
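The handshake described above can be sketched as a four-phase REQ/ACK exchange. This minimal Python simulation rests on stated assumptions. The `Bus`, `Sender`, and `Receiver` classes and the `req`/`ack` signal names are hypothetical. The two devices take turns observing the shared lines, and there is no clock, so the sketch models an asynchronous bus rather than any particular standard.

```python
from dataclasses import dataclass


@dataclass
class Bus:
    data: int = 0
    req: bool = False  # driven by the sender: "the data lines hold valid data"
    ack: bool = False  # driven by the receiver: "the data has been latched"


class Sender:
    def __init__(self, words: list[int]):
        self.pending = list(words)
        self.waiting_for_ack = False

    def step(self, bus: Bus) -> None:
        if not self.waiting_for_ack:
            # Phase 1: start a new transfer only once the previous ACK is gone.
            if self.pending and not bus.ack:
                bus.data = self.pending.pop(0)
                bus.req = True
                self.waiting_for_ack = True
        elif bus.ack:
            # Phase 3: the receiver has the data, so release REQ.
            bus.req = False
            self.waiting_for_ack = False


class Receiver:
    def __init__(self):
        self.received: list[int] = []

    def step(self, bus: Bus) -> None:
        if bus.req and not bus.ack:
            # Phase 2: latch the data lines and acknowledge.
            self.received.append(bus.data)
            bus.ack = True
        elif not bus.req and bus.ack:
            # Phase 4: REQ has dropped, so drop ACK; the bus is idle again.
            bus.ack = False


def run(words: list[int]) -> tuple[list[int], int]:
    """Alternate sender and receiver steps until every word has been handed over."""
    bus, tx, rx = Bus(), Sender(words), Receiver()
    steps = 0
    while tx.pending or tx.waiting_for_ack or bus.ack:
        tx.step(bus)
        rx.step(bus)
        steps += 1
    return rx.received, steps


print(run([0x12, 0x34]))  # ([18, 52], 4)
```

Each word costs two simulated steps: one to raise REQ and ACK, one to lower them. That return-to-idle phase is the price a four-phase handshake pays for its simplicity.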

Types of Data Buses

There are several types of data buses used in modern computing systems, each with its own characteristics and uses:

| Bus Type | Description | Speed | Width (typical) |
|---|---|---|---|
| System Bus | Connects CPU, memory, and other core components | High | Wide (32-256 bits) |
| Local Bus | Connects CPU to cache memory and local peripherals | Very High | Wide (32-256 bits) |
| Expansion Bus | Connects peripherals and expansion cards to the system bus | Moderate | Narrow (8-32 bits) on parallel designs; serial lanes on PCIe |
| Serial Bus | Transfers data one bit at a time | Low to High | 1 bit per lane |

System Bus

The system bus is the main data pathway in a computer, connecting the CPU to main memory and other core components. It typically consists of a control bus, address bus, and data bus.

The control bus carries control signals that manage the flow of data between components, while the address bus carries memory addresses that indicate where data should be sent or retrieved from. The data bus carries the actual data being transferred.
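A single bus cycle uses all three groups of lines at once. The sketch below is a hypothetical Python model, not any real bus protocol. The control bus is represented as a READ/WRITE flag, the address bus as an 8-bit address, and the data bus as an 8-bit value. The widths and the `Memory`, `BusCycle`, and `Control` names are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Control(Enum):
    """Values carried on the (hypothetical) control bus."""
    READ = "read"
    WRITE = "write"


@dataclass
class BusCycle:
    control: Control            # control bus: which operation to perform
    address: int                # address bus: which location to access
    data: Optional[int] = None  # data bus: value written, or returned on a read


class Memory:
    """Hypothetical memory device attached to the bus."""

    def __init__(self, address_bits: int = 8, data_bits: int = 8):
        self.cells = [0] * (1 << address_bits)  # one cell per address the bus can express
        self.data_mask = (1 << data_bits) - 1   # only this many data lines exist

    def respond(self, cycle: BusCycle) -> BusCycle:
        if not 0 <= cycle.address < len(self.cells):
            raise ValueError("address does not fit on the address bus")
        if cycle.control is Control.WRITE:
            if cycle.data is None:
                raise ValueError("a write cycle must drive the data bus")
            # Bits beyond the data-bus width are dropped to make the width visible.
            self.cells[cycle.address] = cycle.data & self.data_mask
            return cycle
        # READ: the memory drives the data bus with the stored value.
        return BusCycle(Control.READ, cycle.address, self.cells[cycle.address])


mem = Memory()
mem.respond(BusCycle(Control.WRITE, address=0x10, data=0x2A))
print(mem.respond(BusCycle(Control.READ, address=0x10)).data)  # 42
```

The address width bounds how many locations can be reached (256 here), and the data width bounds how many bits move per cycle (8 here). The two are independent design choices.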

System buses are designed to handle high-speed data transfer and are usually quite wide, ranging from 32 to 256 bits in modern systems.

Local Bus

A local bus is a high-speed bus that connects the CPU to cache memory and other performance-critical peripherals. Local buses are designed to provide faster data transfer rates than the system bus, as they are directly connected to the CPU.

Examples of local buses include the Front Side Bus (FSB) used in older Intel systems and the HyperTransport bus used in some AMD systems.

Expansion Bus

An expansion bus is a bus that allows additional peripherals and expansion cards to be connected to the system bus. Expansion buses are typically slower and narrower than the system bus, as they are designed for less performance-critical devices.

Common expansion buses include PCI (Peripheral Component Interconnect), PCI Express (PCIe), and AGP (Accelerated Graphics Port).

Serial Bus

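A serial bus transfers data one bit at a time over a single data line or differential pair. Serial buses cover a wide range of speeds. Slow serial links serve devices such as keyboards, mice, and printers, while fast serial links also carry high-bandwidth traffic (PCI Express, for example, is serial).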

Examples of serial buses include USB (Universal Serial Bus), FireWire, and I2C (Inter-Integrated Circuit).
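Serializing a parallel word is essentially a shift-register operation. The Python sketch below converts a byte to a sequence of bits and back. The function names are hypothetical, and bit order is a per-protocol choice (I2C, for example, sends the most significant bit first), so it is exposed here as a parameter.

```python
def serialize(byte: int, msb_first: bool = True) -> list[int]:
    """Split one byte into the sequence of bits sent on the data line."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("expected a value between 0 and 255")
    positions = range(7, -1, -1) if msb_first else range(8)
    return [(byte >> i) & 1 for i in positions]


def deserialize(bits: list[int], msb_first: bool = True) -> int:
    """Reassemble a byte by shifting received bits into a register."""
    ordered = bits if msb_first else list(reversed(bits))
    value = 0
    for bit in ordered:
        value = (value << 1) | bit
    return value


print(serialize(0xA5))  # [1, 0, 1, 0, 0, 1, 0, 1]
```

Sender and receiver must agree on bit order. If they do not, a byte such as 0x01 arrives as 0x80.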

Bus Width and Speed

The width and speed of a data bus are crucial factors in determining its performance. The width of a bus refers to the number of bits that can be transferred simultaneously, while the speed refers to how quickly data can be transmitted.

Wider buses can transfer more data per clock cycle, leading to higher overall bandwidth. For example, a 64-bit bus can transfer twice as much data per clock cycle as a 32-bit bus.

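Bus speed is quoted either as a clock frequency in MHz (megahertz) or as a transfer rate in MT/s or GT/s (mega- or gigatransfers per second). The two differ when a bus moves data more than once per clock cycle. DDR memory, for example, transfers on both the rising and falling clock edges, so its transfer rate is twice its clock frequency. Higher transfer rates allow faster data transfer.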

Modern high-performance systems often use wide, high-speed buses to maximize data transfer performance. For instance, a DDR4 SDRAM channel has a 64-bit data bus, and the JEDEC-standard DDR4 speed grades reach 3200 MT/s (megatransfers per second).
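Peak bandwidth follows directly from width and transfer rate. The Python sketch below applies that arithmetic to DDR4-3200, which runs its I/O bus at 1600 MHz and transfers on both clock edges. The function names are hypothetical, and the result is a theoretical peak that ignores refresh, command overhead, and other effects that lower real throughput.

```python
def transfer_rate(clock_hz: float, transfers_per_clock: int) -> float:
    """Transfers per second; DDR buses move data on both clock edges (2 per clock)."""
    return clock_hz * transfers_per_clock


def peak_bandwidth(width_bits: int, transfers_per_s: float) -> float:
    """Theoretical peak in bytes per second: bytes per transfer times transfers per second."""
    return width_bits / 8 * transfers_per_s


# DDR4-3200: 1600 MHz I/O clock, two transfers per clock, 64-bit channel.
rate = transfer_rate(1600e6, 2)  # 3.2e9 transfers/s = 3200 MT/s
bw = peak_bandwidth(64, rate)
print(f"{bw / 1e9:.1f} GB/s")    # 25.6 GB/s

# Same transfer rate on a 32-bit bus: half the bytes per transfer.
print(f"{peak_bandwidth(32, rate) / 1e9:.1f} GB/s")  # 12.8 GB/s
```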

Importance of Data Bus in Computer Performance

The performance of a computer system is heavily dependent on the efficiency of its data bus. A well-designed data bus allows for fast, seamless communication between components, while a poorly designed bus can lead to bottlenecks and reduced performance.

Bandwidth and Throughput

Bandwidth and throughput are two key metrics for assessing data bus performance. Bandwidth refers to the maximum amount of data that can be transferred over the bus in a given time period, typically measured in bytes per second (B/s) or bits per second (bps).

Throughput, on the other hand, is the rate at which useful data is actually delivered under a real workload. It is always at or below the bandwidth, because of factors such as bus contention, latency, and protocol overhead.
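One way to see the gap between bandwidth and throughput is to discount the peak rate twice: once for protocol overhead, and once for the share of time a device actually holds the bus. The model below is a simplified Python sketch. The 1 GB/s peak, 64-byte payload, 16 bytes of per-transaction overhead, and 50% bus share are hypothetical numbers, not figures from any specific bus.

```python
def effective_throughput(peak_bytes_per_s: float, payload_bytes: int,
                         overhead_bytes: int, bus_share: float = 1.0) -> float:
    """Useful bytes per second delivered to one device.

    payload_bytes / (payload_bytes + overhead_bytes) is the fraction of each
    transaction that is real data; bus_share is the fraction of time this
    device holds the bus (below 1.0 when other devices contend for it).
    """
    efficiency = payload_bytes / (payload_bytes + overhead_bytes)
    return peak_bytes_per_s * efficiency * bus_share


peak = 1e9  # hypothetical 1 GB/s bus
print(effective_throughput(peak, 64, 16) / 1e6)       # 800.0 (MB/s, bus to itself)
print(effective_throughput(peak, 64, 16, 0.5) / 1e6)  # 400.0 (MB/s, sharing the bus)
```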

A high-bandwidth bus with efficient throughput is essential for ensuring optimal system performance, particularly in data-intensive applications such as gaming, video editing, and scientific computing.

Latency and Clock Speed

Latency is another important factor in data bus performance. Latency is the time delay between when a data transfer is initiated and when it is completed. Lower latency means each individual transfer finishes sooner, which improves overall system responsiveness.

Clock speed is related to latency, but not in a simple way. A higher clock speed allows more transfers per second, which raises bandwidth. It lowers latency only if an operation still takes the same number of clock cycles. In practice, faster buses often need more cycles per operation, so absolute latency may improve far less than the clock speed suggests.
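Converting a latency measured in clock cycles into nanoseconds shows why a higher clock speed does not automatically mean lower latency. The sketch below uses illustrative CAS-latency values in the range of common DDR4 speed grades; treat the specific pairings as assumptions, not datasheet figures. The faster bus needs more cycles, so its absolute latency barely changes even though its transfer rate is a third higher.

```python
def latency_ns(cycles: int, clock_mhz: float) -> float:
    """Convert a latency given in clock cycles to nanoseconds."""
    return cycles * 1000.0 / clock_mhz


# Illustrative DDR4 CAS latencies: (label, I/O clock in MHz, CAS latency in cycles)
grades = [("DDR4-2400 CL17", 1200, 17), ("DDR4-3200 CL22", 1600, 22)]
for label, clock, cl in grades:
    print(f"{label}: {latency_ns(cl, clock):.2f} ns")
# DDR4-2400 CL17: 14.17 ns
# DDR4-3200 CL22: 13.75 ns
```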

However, increasing clock speed also increases power consumption and heat generation, which can lead to stability and reliability issues. As such, designers must carefully balance clock speed with other factors to ensure optimal performance and reliability.

Bus Contention and Arbitration

Bus contention occurs when multiple devices attempt to use the bus simultaneously, leading to delays and reduced performance. To mitigate bus contention, buses use arbitration schemes to determine which device gets priority access to the bus.

Common arbitration schemes include:

- First-Come, First-Served (FCFS): Grants access to the first device that requests it

- Priority-Based: Grants access based on pre-assigned priority levels

- Round-Robin: Grants access to each device in turn, ensuring fair distribution of bus time

Efficient arbitration is crucial for minimizing bus contention and ensuring optimal system performance, particularly in systems with many connected devices.
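The three schemes can be sketched as small arbiters that take the current requests and return the device granted the bus. This Python version is a simplified model, not a hardware design. The class and function names are hypothetical, each call represents one arbitration round, and `None` means no device requested the bus.

```python
from collections import deque
from typing import Optional


def fixed_priority(requests: list[bool]) -> Optional[int]:
    """Priority-based: grant the requesting device with the lowest index (0 = highest)."""
    for device, wants_bus in enumerate(requests):
        if wants_bus:
            return device
    return None


class RoundRobinArbiter:
    """Round-robin: the search starts just after the device granted last time."""

    def __init__(self, num_devices: int):
        self.num_devices = num_devices
        self.last_grant = num_devices - 1  # so device 0 is considered first

    def grant(self, requests: list[bool]) -> Optional[int]:
        for offset in range(1, self.num_devices + 1):
            device = (self.last_grant + offset) % self.num_devices
            if requests[device]:
                self.last_grant = device
                return device
        return None


class FcfsArbiter:
    """First-come, first-served: grants in the order requests arrived."""

    def __init__(self):
        self.queue = deque()

    def request(self, device: int) -> None:
        if device not in self.queue:  # a device waits in line only once
            self.queue.append(device)

    def grant(self) -> Optional[int]:
        return self.queue.popleft() if self.queue else None


rr = RoundRobinArbiter(3)
everyone = [True, True, True]
print([rr.grant(everyone) for _ in range(4)])  # [0, 1, 2, 0]
print(fixed_priority([False, True, True]))     # 1
```

Under constant load, the fixed-priority arbiter would keep granting device 0, which is why round-robin is preferred when fairness matters.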

Enhancements and Innovations in Data Bus Technology

Data bus technology has evolved significantly over the years, with numerous enhancements and innovations aimed at improving performance, efficiency, and reliability.

Pipelining and Burst Mode

Pipelining is a technique that allows new data transfers to be initiated before earlier ones have completed. Overlapping transfers in this way can significantly improve bus throughput and hide latency, although each individual transfer still takes as long as before.

Burst mode is a related technique that allows a device to transfer multiple data items in rapid succession after a single arbitration, typically sending only the starting address rather than an address for every item. This further improves performance by spreading the arbitration and addressing overhead across many data items.
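A rough cycle count shows where each technique saves time. The Python functions below use hypothetical costs: a 4-cycle transfer latency, 3 cycles to win arbitration, 1 cycle for the address phase, and 1 cycle per data word. They ignore stalls and are a back-of-the-envelope model, not the timing of any real bus.

```python
def sequential_cycles(n: int, latency: int) -> int:
    """Without pipelining: each transfer starts only after the previous one finishes."""
    return n * latency


def pipelined_cycles(n: int, latency: int, issue_interval: int = 1) -> int:
    """With pipelining: a new transfer starts every `issue_interval` cycles.

    Each transfer still takes `latency` cycles; only the overlap saves time.
    """
    return 0 if n == 0 else latency + (n - 1) * issue_interval


def single_transfer_cycles(n: int, arbitration: int = 3, address: int = 1) -> int:
    """n separate transfers: arbitrate, send an address, move one data word, repeat."""
    return n * (arbitration + address + 1)


def burst_cycles(n: int, arbitration: int = 3, address: int = 1) -> int:
    """One burst: arbitrate once, send the starting address once, then n data beats."""
    return 0 if n == 0 else arbitration + address + n


print(sequential_cycles(8, 4), pipelined_cycles(8, 4))  # 32 11
print(single_transfer_cycles(8), burst_cycles(8))       # 40 12
```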

Multi-Channel and Split Transactions

Multi-channel architectures use multiple parallel buses to increase overall bandwidth and reduce contention. Each channel can operate independently, allowing for simultaneous data transfers on different parts of the system.
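Multi-channel memory systems commonly spread consecutive addresses across channels so that sequential accesses keep several channels busy at once. The sketch below assumes simple block interleaving at a hypothetical 64-byte granularity and reuses the 25.6 GB/s DDR4-3200 per-channel peak. Real memory controllers often use more elaborate address hashing, so treat `channel_for` as illustrative.

```python
def channel_for(address: int, channels: int, interleave_bytes: int = 64) -> int:
    """Channel that serves `address` under simple block interleaving (an assumption)."""
    return (address // interleave_bytes) % channels


def aggregate_bandwidth(channels: int, per_channel_bytes_per_s: float) -> float:
    """Peak bandwidth when every channel is transferring at the same time."""
    return channels * per_channel_bytes_per_s


# Four consecutive 64-byte blocks alternate between two channels.
print([channel_for(a, 2) for a in range(0, 256, 64)])  # [0, 1, 0, 1]
print(aggregate_bandwidth(2, 25.6e9) / 1e9)            # 51.2
```

The aggregate figure is reached only when the workload actually spreads its accesses across channels. A stream that keeps hitting one channel sees that channel's bandwidth alone.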

Split transactions are another technique for reducing bus contention. With split transactions, a device can initiate a data transfer and then release the bus while waiting for the data to become available. This allows other devices to use the bus in the meantime, improving overall system performance.
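The benefit is easiest to see by counting cycles for several devices that each issue one read. The Python model below is a simplified sketch with hypothetical timings, and its assumptions are stated in the code. It compares holding the bus locked during the memory latency with releasing it between request and response.

```python
def atomic_cycles(k: int, request: int, latency: int, response: int) -> int:
    """Without split transactions, each read holds the bus while memory works."""
    return k * (request + latency + response)


def split_cycles(k: int, request: int, latency: int, response: int) -> int:
    """With split transactions, the bus is released between request and response.

    Assumes (hypothetically) that memory can work on all outstanding requests at
    once, that all k requests are issued back to back, and that responses
    return in request order.
    """
    bus_free = k * request  # the bus is busy issuing requests until this cycle
    for i in range(k):
        ready = (i + 1) * request + latency  # memory has data for request i
        start = max(ready, bus_free)         # the response needs the data and the bus
        bus_free = start + response
    return bus_free


# Four devices each read once: 1-cycle request, 10-cycle memory latency, 2-cycle response.
print(atomic_cycles(4, 1, 10, 2), split_cycles(4, 1, 10, 2))  # 52 19
```

With a single device, the two approaches take the same time. The gain appears only when other devices can use the cycles that would otherwise be spent waiting.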

Intelligent Bus Interfaces

Intelligent bus interfaces are hardware components that manage communication between devices and the bus. These interfaces can perform various functions, such as:

- Buffering data to minimize latency

- Performing protocol translation to enable communication between different bus types

- Implementing error detection and correction to improve reliability

- Providing advanced power management features to reduce energy consumption

Intelligent bus interfaces play a crucial role in modern computing systems, enabling efficient, reliable communication between diverse components and buses.
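Error detection can be as simple as a parity bit. The Python sketch below shows even parity, the most basic scheme, chosen here only for illustration. Real bus interfaces often use stronger codes such as CRCs or ECC, and the function names are hypothetical. As the last line shows, parity catches any single flipped bit but misses an even number of flips.

```python
def even_parity_bit(word: int) -> int:
    """Parity bit that makes the total number of 1 bits (word plus parity) even."""
    if word < 0:
        raise ValueError("expected a non-negative word")
    return bin(word).count("1") % 2


def parity_ok(word: int, parity: int) -> bool:
    """Receiver side: recompute the parity and compare it with the received bit."""
    return even_parity_bit(word) == parity


sent = 0b1011_0010
p = even_parity_bit(sent)                # four 1 bits, so p == 0
print(parity_ok(sent, p))                # True
print(parity_ok(sent ^ 0b0000_1000, p))  # False: single-bit error detected
print(parity_ok(sent ^ 0b0000_1100, p))  # True: two-bit error slips through
```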

Future Trends in Data Bus Technology

As computing systems continue to evolve, so too will data bus technology. Some of the key trends and developments to watch in the coming years include:

Optical Interconnects

Optical interconnects use light to transmit data, offering several advantages over traditional electrical buses:

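- Higher bandwidth, since optical links support very high signaling rates and can carry multiple wavelengths over a single fiber

- Reduced power consumption and heat generation, particularly over longer distances where electrical signals lose strength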

- Improved signal integrity and noise immunity

While optical interconnects are currently used primarily in high-performance computing and data center environments, they are expected to become more prevalent in mainstream systems as the technology matures and costs decrease.

Advanced Packaging Technologies

Advanced packaging technologies, such as 2.5D and 3D integration, allow for closer integration of components and shorter interconnects. This can significantly improve data bus performance by reducing latency and increasing bandwidth.

In a 2.5D package, multiple chips are mounted on a single interposer layer, enabling high-density interconnects between the chips. In a 3D package, chips are stacked vertically and connected using through-silicon vias (TSVs), further increasing interconnect density and performance.

Neuromorphic and Quantum Computing

Neuromorphic computing seeks to emulate the structure and function of biological neural networks in hardware. These systems often use specialized data buses optimized for the massively parallel, event-driven communication patterns found in neural networks.

Quantum computing uses quantum bits (qubits) to perform computations, which promises to solve certain problems much faster than classical computers. Quantum systems require specialized interconnects that deliver control signals from classical electronics to the qubits and carry measurement results back.

As these emerging computing paradigms mature, they will likely drive further innovations in data bus technology to support their unique requirements.

FAQ

What is the difference between a data bus and an address bus?

A data bus carries the actual data being transferred between components, while an address bus carries memory addresses that indicate where data should be sent or retrieved from.

How does bus width affect performance?

Wider buses can transfer more data per clock cycle, leading to higher overall bandwidth. For example, a 64-bit bus can transfer twice as much data per clock cycle as a 32-bit bus.

What is bus contention, and how can it be mitigated?

Bus contention occurs when multiple devices attempt to use the bus simultaneously, leading to delays and reduced performance. To mitigate bus contention, buses use arbitration schemes such as first-come, first-served (FCFS), priority-based, or round-robin to determine which device gets priority access to the bus.

What are the advantages of optical interconnects over electrical buses?

Optical interconnects offer several advantages over electrical buses, including higher bandwidth, reduced power consumption and heat generation (particularly over longer distances), and improved signal integrity and noise immunity.

How do advanced packaging technologies improve data bus performance?

Advanced packaging technologies, such as 2.5D and 3D integration, allow for closer integration of components and shorter interconnects. This can significantly improve data bus performance by reducing latency and increasing bandwidth.